VMMU: A Vietnamese Multitask Multimodal Understanding and Reasoning Benchmark

We introduce VMMU, a Vietnamese Multitask Multimodal Understanding and Reasoning Benchmark with 2.5k multimodal questions across 7 tasks. Despite strong Vietnamese OCR performance, proprietary models achieve only 66% mean accuracy. Analysis shows the primary sources of failure are multimodal grounding and reasoning over text and visual evidence, rather than OCR.

Vision Language Models (VLMs) have made rapid progress on multimodal benchmarks, demonstrating strong performance on complex visual and textual reasoning tasks in English. However, it remains unclear how well these models perform in low-resource languages, especially when language understanding, visual grounding, and reasoning are all required to complete a task.

We introduce VMMU, a Vietnamese Multitask Multimodal Understanding and Reasoning Benchmark designed to evaluate how vision language models interpret and reason over visual and textual information beyond English. VMMU consists of 2.5k multimodal questions across 7 tasks, covering a diverse range of problem contexts, including STEM problem solving, data interpretation, rule-governed visual reasoning, and abstract visual reasoning.

All questions require genuine multimodal integration, rather than reliance on text-only cues or OCR-based shortcuts. We evaluate a diverse set of state-of-the-art (SOTA) proprietary VLMs on VMMU and find that despite strong Vietnamese OCR performance, proprietary models achieve only 66% mean accuracy. Further analysis shows that the primary sources of failure are not OCR, but instead multimodal grounding and reasoning over text and visual evidence.

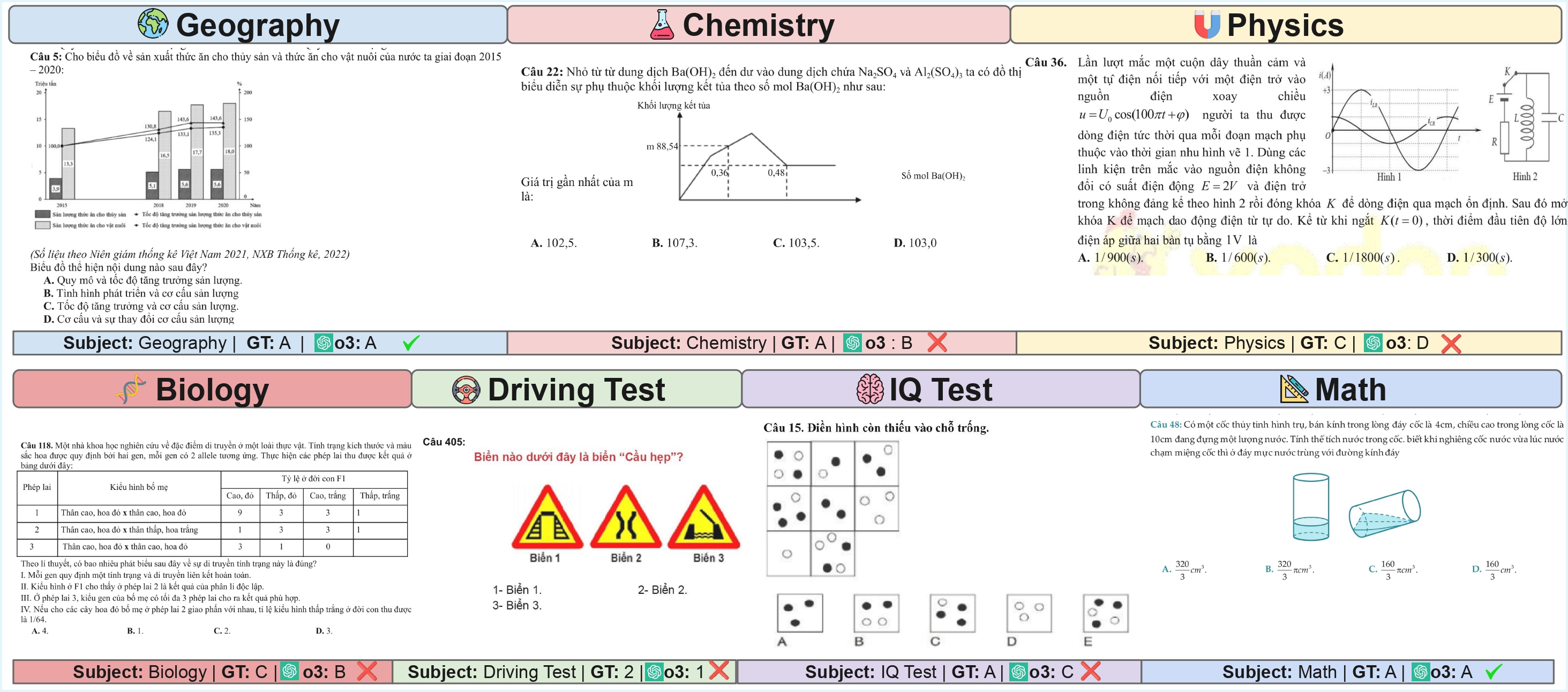

Example of a Vietnamese multimodal exam question from ViExam, combining text and visual elements.

Non-thinking proprietary VLMs achieve only 50-71% accuracy on VMMU, while thinking VLMs perform significantly better at 73-86%. This indicates substantial room for improvement in Vietnamese multimodal understanding.

Across 5 proprietary SOTA VLMs, Vietnamese embedded-text transcription is consistently strong (mean BLEU 89.01%, F1 94.30%, CER 6.59%, WER 9.33%). Most remaining errors are attributable to multimodal grounding and downstream reasoning, not OCR failures.
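For readers unfamiliar with the two error-rate metrics above, the following is a minimal sketch of how CER and WER are conventionally computed from Levenshtein edit distance. The function names, the NFC normalization step, and the example strings are assumptions made for illustration; the source does not describe the exact scoring script used for VMMU.

```python
import unicodedata


def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (strings or token lists)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # delete a reference item
                            curr[j - 1] + 1,      # insert a hypothesis item
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def _nfc(text):
    # Assumption: compare composed forms so that Vietnamese diacritics
    # count as one character each.
    return unicodedata.normalize("NFC", text)


def cer(reference, hypothesis):
    """Character error rate: character edits / reference length."""
    ref, hyp = _nfc(reference), _nfc(hypothesis)
    if not ref:
        raise ValueError("reference must be non-empty")
    return edit_distance(ref, hyp) / len(ref)


def wer(reference, hypothesis):
    """Word error rate: word edits / number of reference words."""
    ref, hyp = _nfc(reference).split(), _nfc(hypothesis).split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref, hyp) / len(ref)


# Illustrative strings only: one dropped diacritic in a five-word line.
reference = "Câu 1: Tính diện tích"
hypothesis = "Câu 1: Tinh diện tích"
print(round(cer(reference, hypothesis), 4))  # 0.0476
print(wer(reference, hypothesis))            # 0.2
```

A single missing tone mark costs one character edit but a whole word error, which is why WER is typically higher than CER on the same transcription.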

When the question and options are given as text instead of being rendered within the image (Split-MM), accuracy increases for every model (+6 percentage points on average), showing that separating text from visual evidence reduces interference and improves multimodal reasoning.

Translating the Vietnamese text into English consistently reduces accuracy for all models (-2 percentage points on average). This suggests that Vietnamese language understanding is not the primary bottleneck, and translation introduces mismatches that degrade grounded multimodal reasoning.

When visual evidence is removed, accuracy drops significantly (-21.27 points); yet VLMs still perform above random chance (25.92%), suggesting substantial reliance on text-only priors and exam heuristics rather than genuine visual grounding.

Questions that truly require image understanding are significantly more challenging than text-sufficient questions, demonstrating the benchmark's focus on visual reasoning.

Shuffling answer options has a minor impact on model performance, indicating models are not heavily reliant on answer position biases.
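One way such an option-shuffling check could be set up is sketched below. It assumes each question exposes its options as a list of strings and its gold answer as an index into that list; `shuffle_options` is a hypothetical helper, not the procedure documented for VMMU.

```python
import random


def shuffle_options(options, answer_index, seed=None):
    """Return the options in a random order plus the new gold-answer index."""
    rng = random.Random(seed)      # seeded so a run can be reproduced
    order = list(range(len(options)))
    rng.shuffle(order)             # order[k] = original index shown at slot k
    shuffled = [options[i] for i in order]
    new_answer_index = order.index(answer_index)
    return shuffled, new_answer_index


options = ["12 cm", "15 cm", "18 cm", "21 cm"]  # illustrative values
shuffled, gold = shuffle_options(options, answer_index=2, seed=0)
print(shuffled[gold])  # 18 cm
```

Comparing accuracy on the original and shuffled orderings then isolates position bias: a model that picks by content should score about the same on both.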

Running evaluations multiple times does not change model rankings or main conclusions, confirming the robustness of the benchmark results.

Vision-language models without reasoning capabilities tend to be overconfident in their incorrect predictions.

State-of-the-art vision-language models consistently follow the required answer format, demonstrating strong instruction-following capabilities.

Open-source vision-language models show substantial performance gaps compared to proprietary models on VMMU benchmark tasks.

The primary weakness of open-source VLMs is weaker embedded-text OCR, which significantly reduces their overall performance.

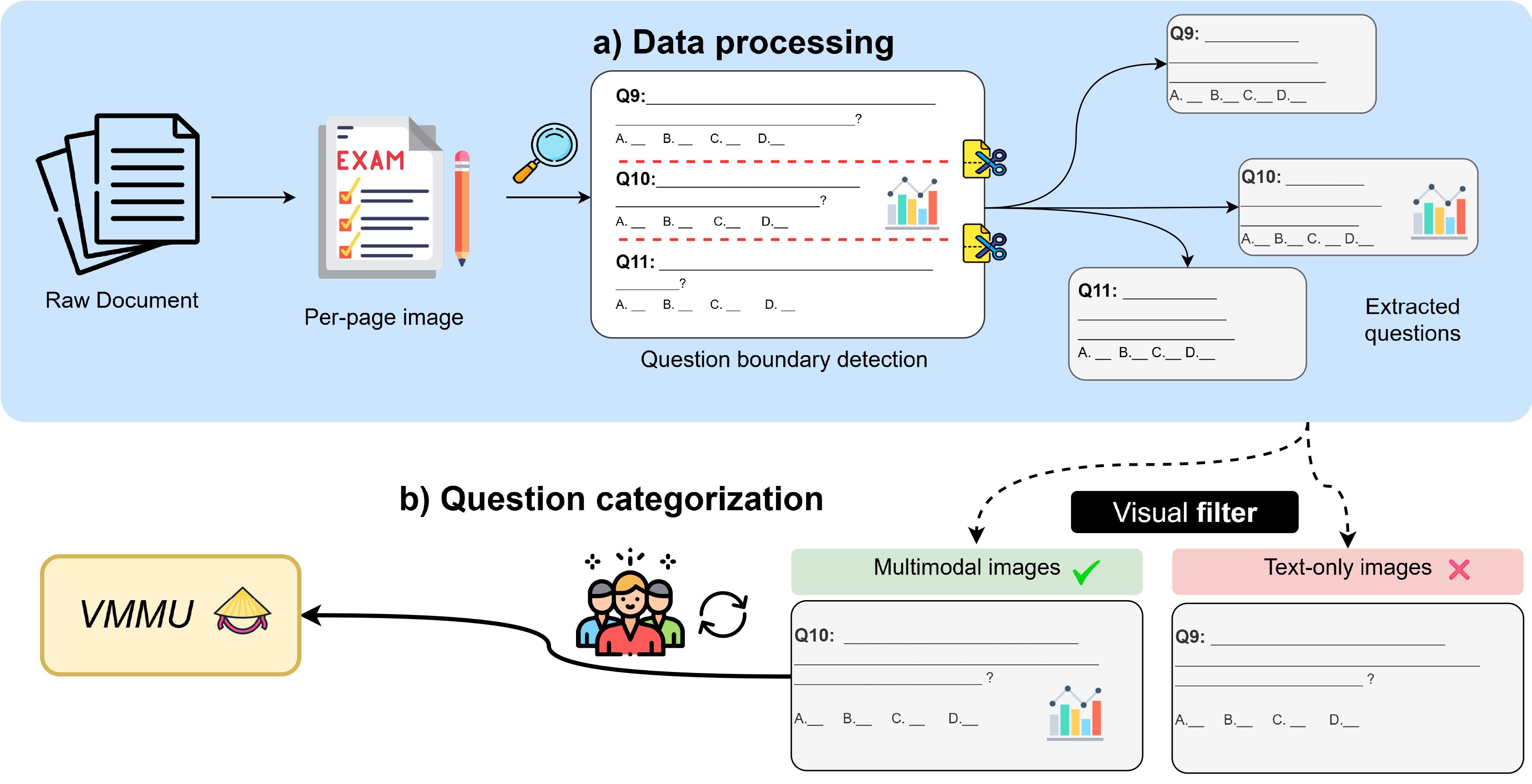

To ensure the quality and validity of our benchmark, we developed a systematic 3-stage pipeline to collect, filter, and classify exam questions. This process was crucial for building a dataset that genuinely tests multimodal reasoning, moving beyond simple text-only questions found in previous benchmarks.

Stage 1: Data Sourcing & Conversion. We began by automatically crawling thousands of exam papers from popular Vietnamese educational websites. Each paper was then converted into high-resolution images, preparing them for analysis.

Stage 2: Automated Classification. Next, our custom pipeline used OCR to identify individual questions on each page. A specialized analyzer then scanned the content of each question, automatically distinguishing multimodal (image-based) questions from text-only ones.

Stage 3: Human Verification. Finally, to ensure maximum accuracy, every single question was manually reviewed by a team of native Vietnamese speakers. They used a custom-built tool to correct any classification errors and validate the final dataset.
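The hand-off between Stage 2 and Stage 3 can be sketched as a record that carries both the automated label and the reviewer's verdict, with the human label taking precedence. All names here (`Question`, `auto_is_multimodal`, `human_is_multimodal`, `build_dataset`) are hypothetical and only illustrate the described flow; the actual tooling is not specified in the source.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Question:
    question_id: str
    ocr_text: str                               # text recovered by OCR (Stage 2)
    auto_is_multimodal: bool                    # analyzer's label (Stage 2)
    human_is_multimodal: Optional[bool] = None  # reviewer's label (Stage 3)


def final_label(q: Question) -> bool:
    """The human verdict overrides the automated one; unreviewed items are rejected."""
    if q.human_is_multimodal is None:
        raise ValueError(f"question {q.question_id} has not been reviewed")
    return q.human_is_multimodal


def build_dataset(questions: List[Question]) -> List[Question]:
    """Keep only the questions confirmed as multimodal after review."""
    return [q for q in questions if final_label(q)]


candidates = [
    Question("q1", "...", auto_is_multimodal=True, human_is_multimodal=True),
    Question("q2", "...", auto_is_multimodal=True, human_is_multimodal=False),
    Question("q3", "...", auto_is_multimodal=False, human_is_multimodal=True),
]
print([q.question_id for q in build_dataset(candidates)])  # ['q1', 'q3']
```

Requiring a reviewer label for every record mirrors the statement that every question was manually checked, so automated misclassifications in either direction get corrected.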

Our ViExam benchmark features 2,548 questions across 7 diverse domains.

| Domain | Questions |
|---|---|
| Math | 456 |
| Physics | 361 |
| Chemistry | 302 |
| Biology | 341 |
| Geography | 481 |
| Driving | 367 |
| IQ | 240 |
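As a quick consistency check, the seven per-domain counts can be summed to confirm the stated total of 2,548. In this sketch the domain names are paired with the counts by following the column order of the results table, which is an assumption rather than something the listing itself states.

```python
# Per-domain question counts; the domain names are assigned by following
# the column order of the results table (assumption).
counts = {
    "Math": 456,
    "Physics": 361,
    "Chemistry": 302,
    "Biology": 341,
    "Geography": 481,
    "Driving": 367,
    "IQ": 240,
}

total = sum(counts.values())
print(total)  # 2548

# Share of the benchmark contributed by each domain, in percent.
for domain, n in counts.items():
    print(f"{domain}: {100 * n / total:.1f}%")
```

The domains are roughly balanced, with each contributing between about 9% and 19% of the questions.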

| Model | a. Math | b. Physics | c. Chemistry | d. Biology | e. Geography | f. Driving | g. IQ | Mean |
|---|---|---|---|---|---|---|---|---|
| Human (Average) | 64.50 | 66.70 | 66.80 | 62.80 | 71.90 | – | – | 66.54 |
| Human (Best) | 98.00 | 100.0 | 100.0 | 100.0 | 100.0 | – | – | 99.60 |
| Random baseline | 25.00 | 24.66 | 24.38 | 24.67 | 25.00 | 33.24 | 24.46 | 25.92 |
| Open-source VLMs | | | | | | | | |
| Aya-Vision-8B | 10.75 | 8.03 | 3.64 | 3.23 | 2.49 | 26.98 | 12.50 | 9.66 |
| Aya-Vision-32B | 12.94 | 14.40 | 16.89 | 17.30 | 21.41 | 32.97 | 24.58 | 20.07 |
| Gemma-3-4B | 26.32 | 17.45 | 22.19 | 22.29 | 27.65 | 40.60 | 21.67 | 25.45 |
| Gemma-3-27B | 47.37 | 30.47 | 38.74 | 30.79 | 47.40 | 43.87 | 35.00 | 39.09 |
| Qwen-2.5-VL-32B | 61.18 | 49.17 | 44.70 | 42.82 | 71.31 | 54.50 | 41.25 | 52.13 |
| Qwen-2.5-VL-72B | 58.33 | 49.86 | 48.34 | 40.47 | 64.66 | 53.95 | 46.67 | 51.75 |
| Llama-4-Scout | 59.65 | 44.88 | 45.03 | 38.71 | 69.02 | 49.05 | 37.08 | 49.06 |
| Llama-4-Maverick | 42.54 | 29.36 | 32.78 | 27.57 | 53.22 | 53.95 | 29.17 | 38.37 |
| Mistral-Small-3.2-24B | 34.65 | 30.28 | 28.48 | 28.15 | 30.98 | 39.78 | 30.83 | 31.88 |
| Mistral-Medium-3 | 44.74 | 36.57 | 37.75 | 29.33 | 36.59 | 45.78 | 33.75 | 37.78 |
| Mean | 39.85 | 31.05 | 31.85 | 28.06 | 42.47 | 44.14 | 31.25 | 35.53 |
| SOTA proprietary VLMs | | | | | | | | |
| Gemini-2.5-Flash | 82.46 | 67.04 | 78.15 | 63.05 | 85.24 | 71.39 | 52.08 | 71.34 |
| Sonnet-4.0 | 64.25 | 41.00 | 53.31 | 44.87 | 48.44 | 58.04 | 44.17 | 50.58 |
| GPT-4.1 | 46.27 | 43.21 | 44.37 | 44.87 | 69.85 | 66.21 | 46.25 | 51.58 |
| o3 | 84.87 | 68.98 | 82.78 | 67.16 | 88.98 | 74.66 | 50.42 | 73.98 |
| Gemini-3.0-Pro | 92.54 | 81.16 | 91.72 | 80.94 | 94.59 | 91.28 | 72.08 | 86.33 |
| GPT-5.0 | 91.23 | 76.45 | 85.10 | 72.73 | 89.40 | 75.20 | 58.75 | 78.41 |
| Mean | 76.94 | 62.97 | 72.57 | 62.27 | 79.42 | 72.80 | 53.96 | 68.70 |
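The Mean column appears to be an unweighted (macro) average over the seven domains rather than a question-weighted one. The sketch below recomputes it for two rows copied from the table, then recomputes the proprietary group mean from the per-model means; the helper name `macro_mean` is introduced here only for illustration.

```python
from statistics import mean

# Per-domain accuracies (%) copied from the table, in column order:
# Math, Physics, Chemistry, Biology, Geography, Driving, IQ.
rows = {
    "Gemini-2.5-Flash": [82.46, 67.04, 78.15, 63.05, 85.24, 71.39, 52.08],
    "o3": [84.87, 68.98, 82.78, 67.16, 88.98, 74.66, 50.42],
}


def macro_mean(scores):
    """Unweighted average: every domain counts equally, whatever its size."""
    return round(mean(scores), 2)


for model, scores in rows.items():
    print(model, macro_mean(scores))
# Gemini-2.5-Flash 71.34
# o3 73.98

# Group mean over the six proprietary models' Mean values.
proprietary_means = [71.34, 50.58, 51.58, 73.98, 86.33, 78.41]
print(macro_mean(proprietary_means))  # 68.7
```

Because domains differ in size (240 to 481 questions), a question-weighted average would generally give slightly different values than the ones reported in the table.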

This study documents systematic challenges in VLMs' Vietnamese multimodal reasoning through comprehensive evaluation across 7 academic domains, demonstrating that failures stem from multimodal integration rather than basic text recognition limitations.

SOTA VLMs achieved only 57.74% accuracy on Vietnamese multimodal exam questions while open-source models averaged 27.70%, both underperforming average human test-takers (66.54%).

VLMs consistently struggle with Vietnamese multimodal content integration, and thinking models such as o3 substantially outperform non-thinking models (74.07% vs 48.28-59.87%). This suggests that future work on tool-augmented reasoning is needed to close the gap on complex reasoning tasks.